Diver accepts input from many sources, but did you know that you can screen scrape information from the Web to use as input? For example, do you want to integrate daily exchange rate data, weather data, or transportation schedules into a model or cBase?

Diver accepts input from many sources, but did you know that you can screen scrape information from the Web to use as input? For example, do you want to integrate daily exchange rate data, weather data, or transportation schedules into a model or cBase?

Paul Duggan, Software Development Manager at Dynamic Business Informatics Ltd. (DBI), recently presented a method to accomplish just this at our annual distributors’ conference. With a few steps, you can pull data from the web. In short, you can use a Google sheet to import the web page data, download the shared Google sheet to a local .csv file, and use the .csv file as input to Diver. Sound simple? Let’s give it a try!

Prerequisites

To create and share Google spreadsheets, you’ll need a Google account. If you don’t have one, sign up for this free account (or check if you already have one) here: https://support.google.com/accounts/answer/27441?hl=en

To use this method to download the data from a web page URL, the data must be contained in the page source in HTML table or list format. You can use your browser to view the HTML page source. (Check your browser documentation for how to view the HTML source. Some browsers have a menu option; other browsers use right mouse clicks or control key combinations.) With the HTML source open, check for <table> or <list> tags. If you find the data that you want in a table or list, you are good to go. If not, this method will not work to retrieve the data.

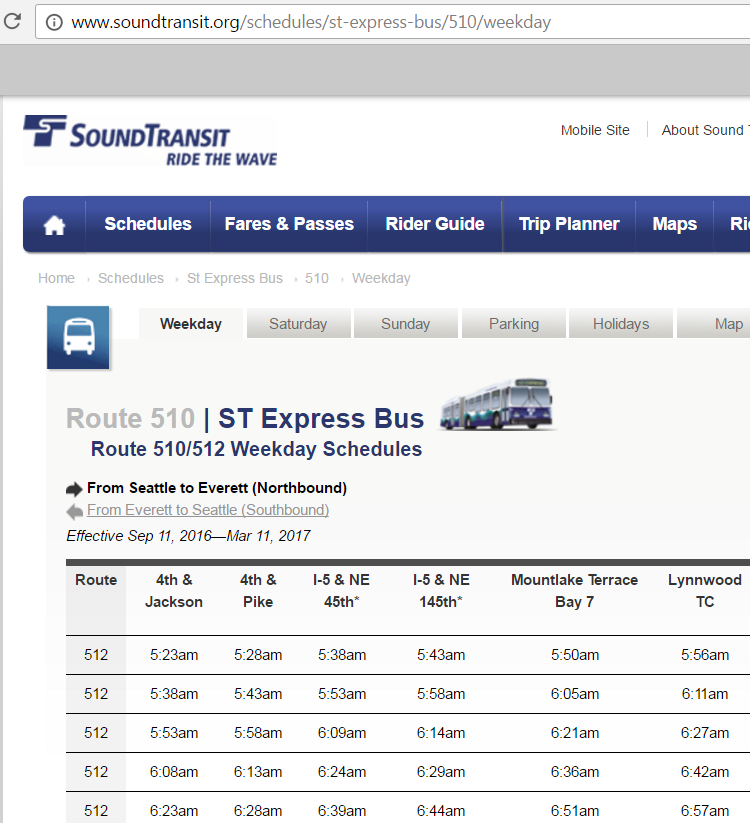

For example, compare the source of these two pages. You should see that the Seattle bus schedule is enclosed in <table> tags. However, the BART schedule is populated with scripting, so the HTML table is not hardcoded. Therefore, the BART schedule cannot be retrieved with this method.

- Seattle SoundTransit bus schedule, Route 510: https://www.soundtransit.org/ride-with-us/routes-schedules?route=510

- San Francisco Bay Area Rapid Transit District (BART), Lafayette Station schedule: http://www.bart.gov/stations/lafy/schedule

To transfer the Google sheet to a local file in .csv format, you’ll need a utility, such as curl or GNU Wget, to transfer files over HTTPS. If you have another favorite file transfer utility, feel free to use it. Check these sites to get more information and download one of the utilities:

- curl: https://curl.se/

- GNU Wget: https://www.gnu.org/software/wget/

Now that we have the prerequisites covered, here are the steps:

Step 1: Identify the URL of the data you want to import

For this example, we’ll use the URL for Seattle SoundTransit weekday schedule for Route 510: https://www.soundtransit.org/schedules/st-express-bus/510/weekday

The weekday tab of the bus schedule displays in table format on the web page.

And the schedule data is included in a <table> in the HTML source, as you can see in this code snippet:

<table class=”timeTable” id=”timeTable”><thead><tr class=”headers”><th class=”headerData”>Route</th>

<th class=”headerData”>4th & Jackson</th>

<th class=”headerData”>4th & Pike</th>

<th class=”headerData”>I-5 & NE 45th*</th>

<th class=”headerData”>I-5 & NE 145th*</th>

<th class=”headerData”>Mountlake Terrace Bay 7</th> …

Step 2: Create a Google sheet

We’ll create a blank Google sheet to import the bus schedule into. Log into your Google account, navigate to spreadsheets, and create a new, blank spreadsheet: https://www.google.com/sheets/about/

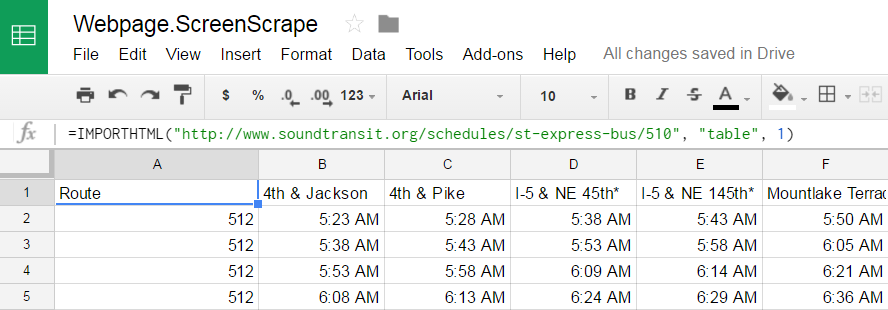

Step 3: Import the data into the Google sheet

To import the bus schedule, we’ll use a Google sheet function, IMPORTHTML. Put your cursor in spreadsheet cell A1, and enter the function with the following parameters:

=IMPORTHTML(“<url>“, “<keyword>“, <num>)

Where:

- <url> is the URL including the protocol “https://“ for the data you want to import (from Step 1). Enclose the URL in quotation marks.

- <keyword> is either “list” or “table” from the HTML source structure.

- <num> is the number of the occurrence, starting at one (1), of the list or table in the HTML source.

For example, to import the first table in the Seattle bus schedule HTML source, you would enter the following function:

=IMPORTHTML(“https://www.soundtransit.org/schedules/st-express-bus/510”, “table”, 1)

After a brief time to load, your spreadsheet should populate with the data from the URL.

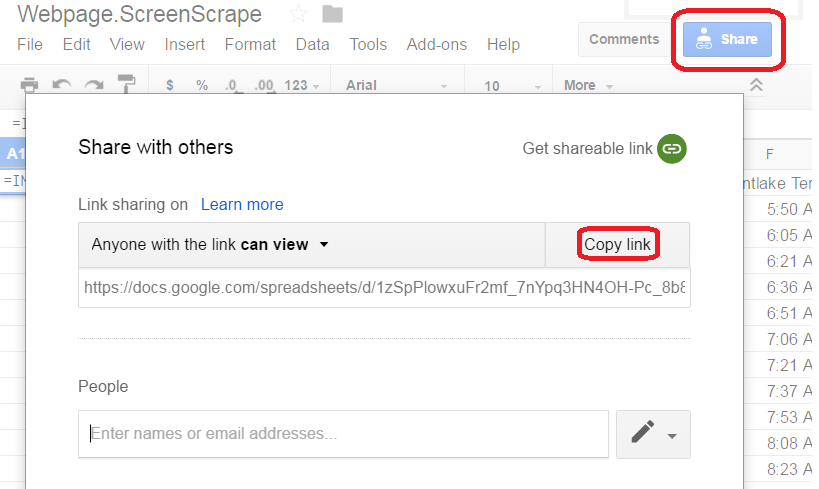

Step 4: Create a shareable link for the Google sheet

Now, you want to share the spreadsheet so that you have the spreadsheet ID and you can download it. Share the spreadsheet, and copy the link with the sheet ID.

Step 5: Use GNU Wget or curl to export the Google sheet data to local file

With the URL of the shared Google sheet, we can use a command line tool, curl or Wget, to download the data as a .csv file. Both curl and Wget have lots of optional parameters you can play around with, but the important parameters to specify are the source location of the shared Google sheet and a target location and file type for the downloaded file.

For curl:

Here’s the command syntax:

C:\curl\src>curl –output <output> https://docs.google.com/spreadsheets/d/<sheetID>/export?gid=X&format=csv

Where:

- –output <output> gives the drive and file name of the output file

- <sheetID> is the Google sheet ID copied from the sharing URL link

- gid=X specifies which tab, starting with zero (0), of the Google sheet has the data

- format=csv specifies that the output file is in .csv format

Here’s the curl command to download our Seattle bus schedule example:

C:\curl\src>curl –output c:\test\output.curl.csv “https://docs.google.com/spreadsheets/d/1zSpPlowxuFr2mf_7nYpq3HN4OH-Pc_8b8liNoNThPY0/export?gid=0&format=csv”

For Wget:

If you prefer Wget, here’s the command syntax:

C:\wget>wget –output-document=<output> https://docs.google.com/spreadsheets/d/<sheetID>/export?gid=X&format=csv

Where:

- –output-document=<output> gives the drive and file name of the output file

- <sheetID> is the Google sheet ID copied from the sharing URL link

- gid=X specifies which tab, starting with zero (0), of the Google sheet has the data

- format=csv specifies that the output file is in .csv format

Here’s the Wget command to download our Seattle bus schedule example:

C:\wget>wget –output-document=c:\test\output.wget.csv https://docs.google.com/spreadsheets/d/1zSpPlowxuFr2mf_7nYpq3HN4OH-Pc_7b8liNoNThPY0/export?gid=0&format=csv

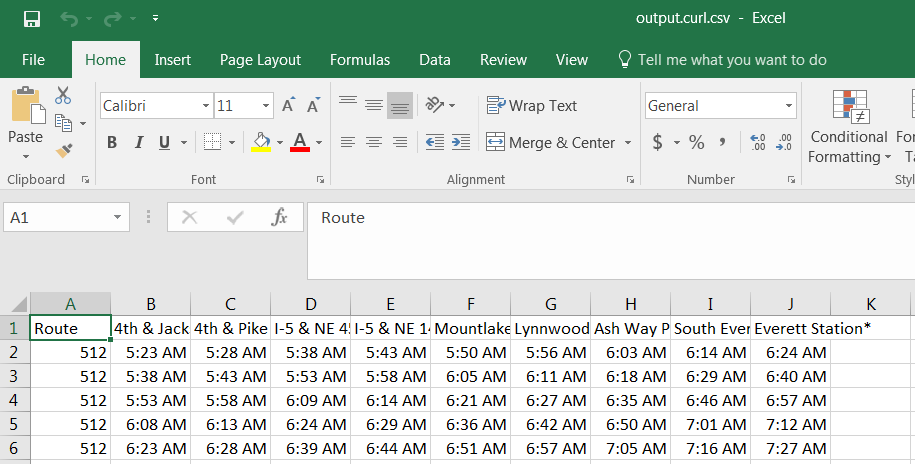

Whichever file transfer tool you use, you now have the web page data downloaded to a local .csv file. You can open the .csv file to check that the data is there.

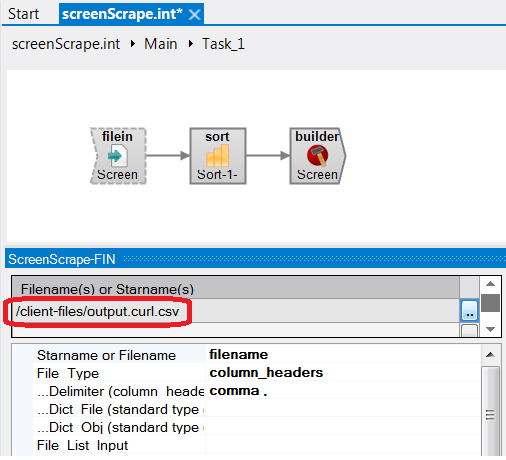

Step 6: Use the local file as the file in input to a model or cBase build

Now that you have the web page data in a local .csv file, you can use it as Diver input. You can move the .csv file to the /client-files/ or other directory to use as the file in to your Integrator script. Define the dimensions, run the script, and you have a model with the web data as input!

- How to Access Dimensional Insight Online Help - May 3, 2018

- Learn about Chart Portlet and the Future of Dimensional Insight Visualizations - September 13, 2017

- How to Use Map Portlet to Visualize Geographic Data - August 10, 2017